Daily Page: 2025-12-03

Brain-Wide Map

Just Princeton Neuroscience Institute and International Brain Lab

Parallel Insights and Direct Observational Mechanisms of Action

Four months after IO/QM Toolkit (whimsically aka, My Little TOE) publication, and ± twenty years after initial visit to Salk Institute mission control.

The impostor syndrome still lingers; yet TRUTH continues to blossom, regardless. OCTAGOY!

More and more, Co + Incidence is not some kind of ridiculous superstitious woo.

Planck-pixel Holodeck Hypothesis: SHEER COINCIDENCE is HARD EVIDENCE.

Via single-unit recording, using Neuropixels probes, yada yada.

“[T]hey also use dynamical systems language to explain the mechanism of decision computation in that model.”

So, sounds like, among other details, we’ve conceptualized / mapped / visualized latent circuit dynamics of electro-chemical transformation as L1 - L6 manifolds (both retinal and cortical?). More incredibly helpful ‘handles’ for grappling with the granularity behind, beneath, between, and within the IO/QM Triple Equivalence.

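Careful, though: two different things share the L1–L6 labels here. The retinal list that comes first is a simplified summary of standard visual neuroscience (the L1–L6 numbering is a convenience; the retina is usually described as having ten layers). Hawkins’s L1–L6, in the second list, refer to the six layers of neocortex that he believes implement prediction, reference frames, and intelligence across the entire brain.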

- L1 – Photoreceptor layer: Contains rods (low-light, peripheral vision) and cones (color, high-acuity central vision). They convert light into electrical signals via phototransduction.

- L2 – Outer nuclear layer (ONL): Cell bodies of rods and cones; houses their nuclei.

- L3 – Outer plexiform layer (OPL): Synaptic connections between photoreceptors and horizontal/bipolar cells. Photoreceptor terminals form ribbon synapses with bipolar and horizontal cells.

- L4 – Inner nuclear layer (INL): Contains nuclei of bipolar, horizontal, and amacrine cells, plus Müller glial cell bodies.

- L5 – Inner plexiform layer (IPL): Synaptic zone where bipolar cells connect to ganglion cells and amacrine cells modulate these signals. Subdivided into ON and OFF sublaminae.

- L6 – Ganglion cell layer (GCL): Cell bodies of retinal ganglion cells whose axons form the optic nerve, carrying processed visual information to the brain.

These layers enable parallel ON/OFF pathways, center-surround receptive fields, and initial feature extraction (motion, direction, color) before signals reach the visual cortex.
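For a concrete handle on "center-surround" and "ON/OFF", here is a toy one-dimensional sketch. It is not a retinal model: the function names, the flat (unweighted) surround, and the `surround_radius` parameter are all assumptions made for illustration, and the responses are left unrectified.

```python
def on_center_response(signal, i, surround_radius=2):
    """Toy ON-center unit: center sample minus the mean of its neighbors.

    Assumption: a flat surround of `surround_radius` samples on each side,
    clipped at the edges of the signal.
    """
    lo = max(0, i - surround_radius)
    hi = min(len(signal), i + surround_radius + 1)
    surround = [signal[j] for j in range(lo, hi) if j != i]
    return signal[i] - sum(surround) / len(surround)


def off_center_response(signal, i, surround_radius=2):
    """Toy OFF-center unit: the sign-flipped ON response."""
    return -on_center_response(signal, i, surround_radius)


# A dark-to-light step edge: five dark samples, then five bright ones.
step = [0.0] * 5 + [1.0] * 5
on = [on_center_response(step, i) for i in range(len(step))]
off = [off_center_response(step, i) for i in range(len(step))]
```

On the step edge, both toy units stay at zero over the uniform stretches and respond only near the boundary, with opposite signs. That is the sense in which the two pathways run in parallel and pull out edges before anything reaches cortex.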

- Layer 1: Mostly dendrites from deeper layers and some inhibitory neurons; carries “context” and predictive signals from higher cortical areas and the thalamus.

- Layer 2/3: Pyramidal cells that integrate local information and send predictions/outputs to other cortical columns and higher areas. Key for forming object representations in the Thousand Brains model.

- Layer 4: The main input layer in sensory cortices; receives feed-forward sensory data (from thalamus in primary areas). Contains spiny stellate cells in granular cortex.

- Layer 5: Output layer that sends signals to motor areas, brainstem, and superior colliculus. In the Thousand Brains model, L5 cells also project to the basal ganglia for voting on actions.

- Layer 6: Sends feedback to the thalamus and modulates input in Layer 4. Crucial for attention and controlling what sensory information is allowed into the column.

Hawkins uses this six-layer neocortical architecture, not the retina, as the universal building block for his hierarchical temporal memory (HTM) and Thousand Brains Theory.
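Since the same L1–L6 labels point at two different tissues on this page, a small lookup table keeps them apart. This is only a note-keeping sketch: the names `RETINA_LAYERS`, `CORTEX_LAYERS`, and `resolve` are hypothetical, and the one-line descriptions are condensed from the two lists above.

```python
# Retinal labels as used on this page (a simplified six-layer numbering).
RETINA_LAYERS = {
    1: "Photoreceptor layer",
    2: "Outer nuclear layer (ONL)",
    3: "Outer plexiform layer (OPL)",
    4: "Inner nuclear layer (INL)",
    5: "Inner plexiform layer (IPL)",
    6: "Ganglion cell layer (GCL)",
}

# Neocortical layers; layers 2 and 3 are described together as "Layer 2/3".
_LAYER_2_3 = "integration and outputs to other columns and higher areas"
CORTEX_LAYERS = {
    1: "context and predictive signals from higher areas and thalamus",
    2: _LAYER_2_3,
    3: _LAYER_2_3,
    4: "main feed-forward sensory input layer",
    5: "output to motor areas, brainstem, and superior colliculus",
    6: "feedback to thalamus, modulating Layer 4 input",
}


def resolve(label, tissue):
    """Look up a label such as 'L4' in the named tissue ('retina' or 'cortex')."""
    number = int(label.upper().lstrip("L"))
    table = {"retina": RETINA_LAYERS, "cortex": CORTEX_LAYERS}[tissue]
    return table[number]
```

So `resolve("L4", "retina")` and `resolve("L4", "cortex")` give very different answers, which is the whole point: "L4" means nothing until the tissue is named.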

If FEAR …

is a movement of THOUGHT …

as TIME …

Then an IO/QM Triple Equivalence is an eternal dispelling light.

“Isn’t it?” — Jiddu Krishnamurti

Andrzej Bargiel

The Human Substrate

What Third Millennium, Post-Automation Era

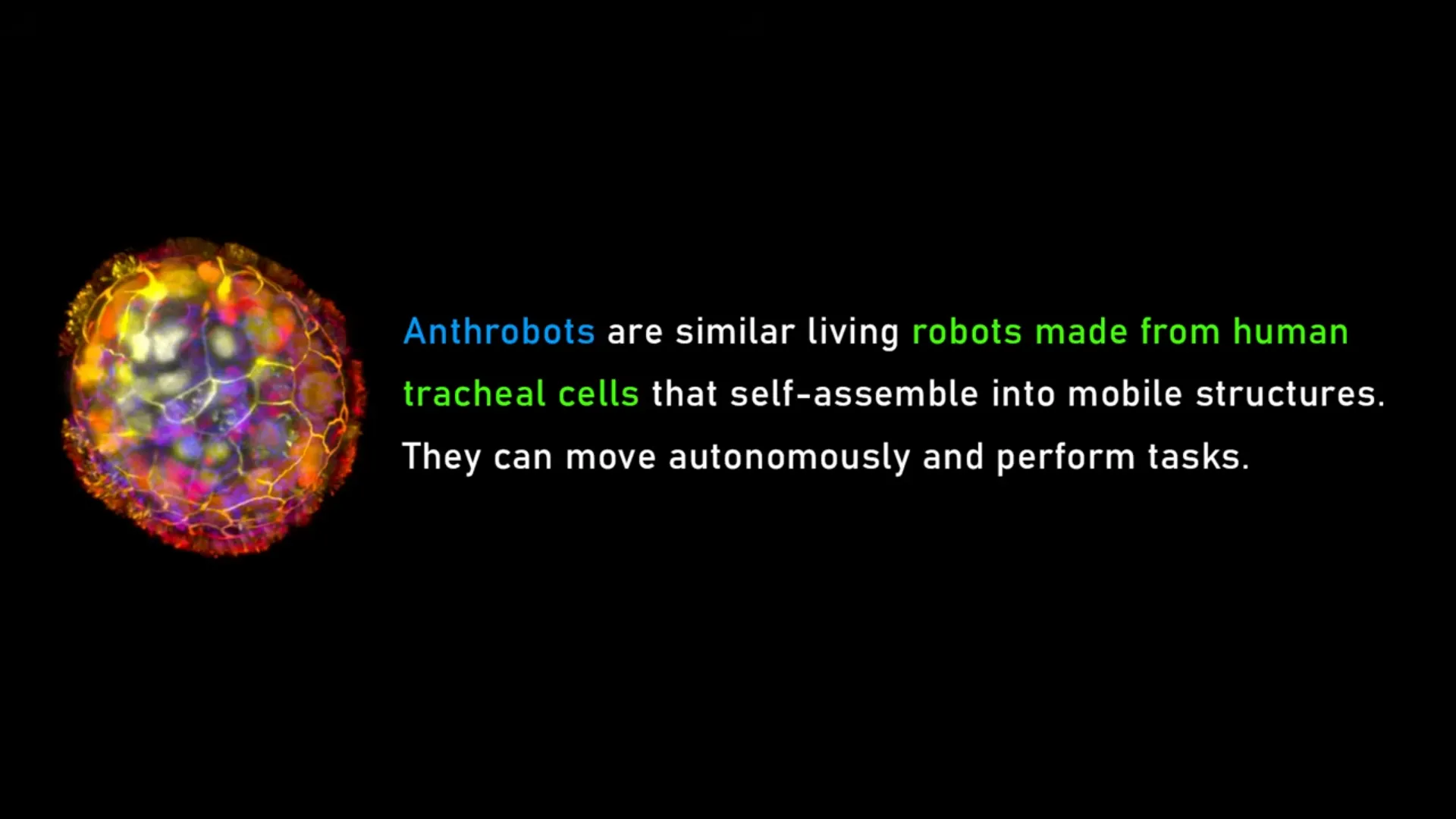

Age of Anthrobots?

“That’ll never happen in my lifetime.”

Then Welcome to the Afterlife. Again.

GNN for GNN’s, etc.

… is a text-based language designed to standardize the representation and communication of Active Inference generative models. It aims to enhance clarity, reproducibility, and interoperability in the field of Active Inference and cognitive modeling.
